Over my years as a judge and homebrewer, I have come across a wide variety of scoresheets for evaluating beers. Some concentrate only on the numeric scores, since they belong to pro competitions where judges must evaluate beers quickly and feedback is limited to off-flavors.

While some employ metrics that differ from the BJCP scoresheet, the beer characteristics evaluated and the numerical weights assigned to each are relatively close to the BJCP metric. Generally speaking, the area with the highest point total is flavor, and the lowest is appearance.

But when it comes to structured feedback, I firmly believe that the BJCP scoresheet remains the finest available. Judges can easily follow the schematic guidance provided on the scoresheet, even if they are unfamiliar with it. In the same way, homebrewers can easily understand the rationale behind the scores judges assign to their beers just by reading the scoresheet.

That being said, not much has changed with the scoresheet since I began using it as a judge in 2017. I believe that it could be improved with a few adjustments. Here are a few ideas.

The beer descriptors checkbox

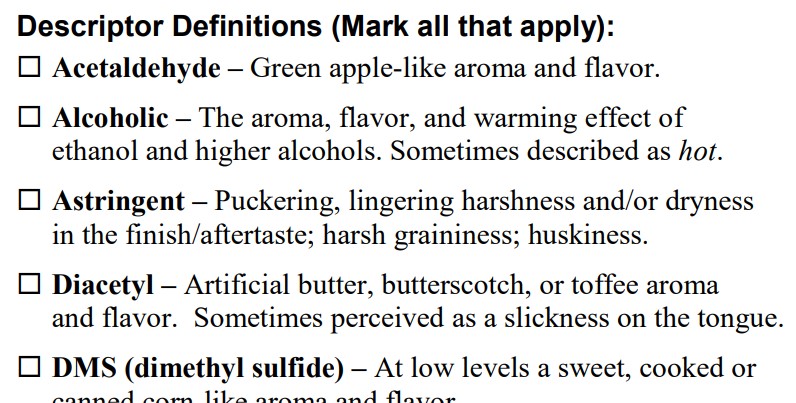

The beer descriptors on the left side of the scoresheet are intended to be just that, descriptors, as all BJCP judges should know. They are more than a collection of off-flavors; a box should be checked any time a judge finds one of these specific characteristics in the beer.

That is to say, even if a spicy Belgian beer has a pleasant clove-like aroma that is consistent with the style, the phenolic box should still be checked.

In my experience as a judge, an exam administrator, and a grader, this can be confusing, especially for new judges. Judges quite often forget to check a descriptor when the attribute is not perceived as an off-flavour in the beer.

Both the judge and the brewer would benefit from turning this column into a “defects-only” list.

Even more so considering that, as a collection of general descriptors, it is already lacking. What if a beer has floral aromas? Where are the malt descriptors? What if the beer has some minerality? I don’t think that an incomplete list of beer attributes is of much use to anybody.

With a little tweak and rewording, the checklist might be far more helpful as a list of off-flavors.

For instance, listing DMS and Vegetal in two different lines is not ideal. They both refer to sulfur aromas; in most cases, the cause is the same compound: DMS.

Vegetal is also a misleading term. I stumbled upon a few scoresheets where the Vegetal checkbox was highlighted, but the judge intended it as a red-pepper-like quality. I would go with a generic “cooked vegetables” checkbox and put DMS in the description together with the other vegetables already listed.

As a second example, I would add the fresh onion/garlic descriptors to the sulfur checkbox. They are common off-flavours produced by sulfur compounds in the hops, and they don’t have a place in the current version of the scoresheet.

These are just some examples that crossed my mind, but I am sure that the list could be easily improved as an off-flavour list.

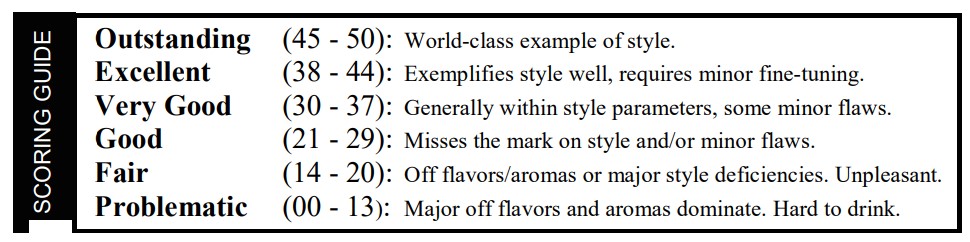

The scoring guide

Let’s be honest, a beer that receives a score below 20 cannot be considered fair. This stands in stark contrast to the scoring range’s “unpleasant” attribute that follows in the description. How is it possible for a beer to be both fair and unpleasant?

Similar reasoning applies to a beer that scores in the high 20s. It is, at most, an OK beer rather than a good beer. Perhaps a fair one.

Considering that the bottom range (the problematic one) is seldom used, even for really bad beers, I think that the whole scoring guide should be reviewed.

I totally agree with the polite approach, but only to an extent. As it stands, this wording can generate some confusion.

It could go something like this:

- 0-13 -> Unpleasant

- 14-20 -> Problematic

- 21-29 -> Fair

- 30-37 -> Good

- 38-44 -> Excellent

- 45-50 -> Outstanding
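For anyone who keeps scores in a spreadsheet or script, the ranges proposed above can be sketched as a simple lookup. This is only an illustration of my suggested wording, not the official BJCP scoring guide; the names `PROPOSED_RANGES` and `describe_score` are made up for the example.

```python
# Proposed ranges from the list above (the article's suggestion,
# not the official BJCP scoring guide). Bounds are inclusive.
PROPOSED_RANGES = [
    (0, 13, "Unpleasant"),
    (14, 20, "Problematic"),
    (21, 29, "Fair"),
    (30, 37, "Good"),
    (38, 44, "Excellent"),
    (45, 50, "Outstanding"),
]


def describe_score(score: int) -> str:
    """Return the proposed descriptor for a total score between 0 and 50."""
    if not 0 <= score <= 50:
        raise ValueError(f"score must be between 0 and 50, got {score}")
    for low, high, label in PROPOSED_RANGES:
        if low <= score <= high:
            return label
    # Not reachable for integer scores: the ranges cover 0..50 without gaps.
    raise ValueError(f"no range matches score {score}")


if __name__ == "__main__":
    for total in (12, 19, 28, 41):
        print(total, describe_score(total))
```

With this wording, a beer scoring 19 reads as "Problematic" and one scoring 28 as "Fair", which is closer to what judges usually mean by those numbers.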

Writing space

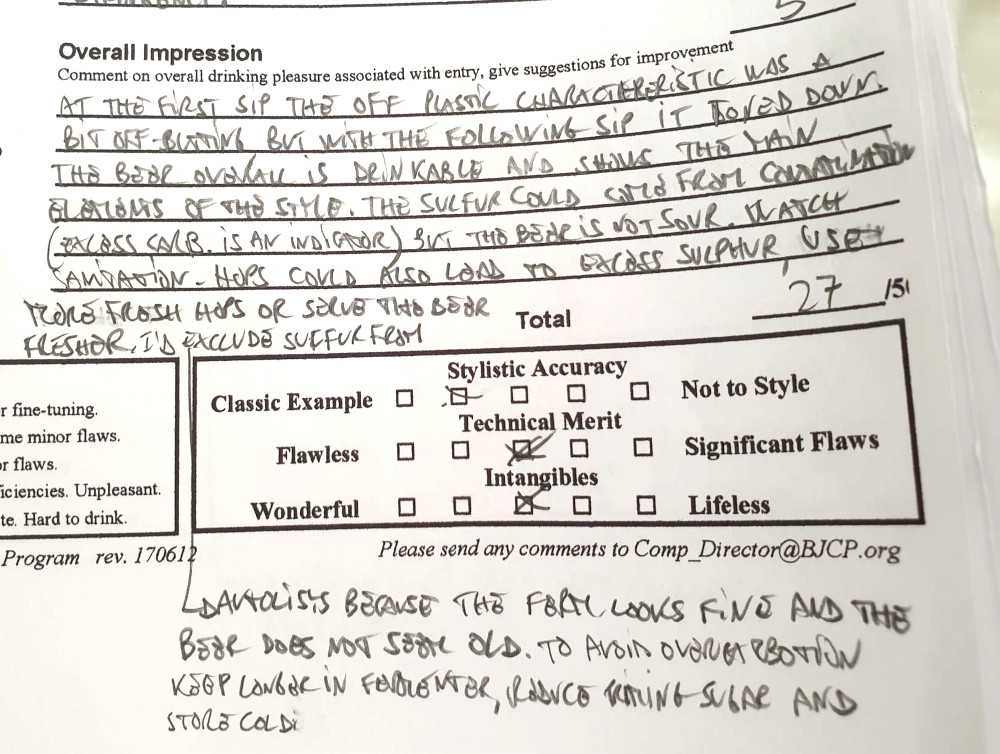

Problematic beers need in-depth assessments. It is challenging to sum up the overall impression in the six lines given, particularly when the beer has two or three off-flavours and significant style flaws.

I frequently find myself writing in areas other than those designated for the Overall comments, causing the scoresheet to become a chaotic jumble of words that spreads in all directions.

A similar argument can be made for the flavour section, where judges must evaluate every aspect of the beer’s flavour, including the aromas in the aftertaste, the balance of the beer, the taste of the malt, hops, fermentation (including phenols and esters in some styles), and any potential off-flavors.

In contrast, mouthfeel usually requires fewer words to be assessed sufficiently. If a line were moved from the Mouthfeel to the Overall section, the judge would have more room to address beer defects and style deficiencies, as well as potential solutions.

Extending the scoresheet slightly at the bottom, where a lot of blank space is taken up by the margin, would allow for an extra line in the Flavour or Overall sections.

If expanding the bottom isn’t an option, the paragraph under “bottle inspection” at the top of the scoresheet could be removed. Since it doesn’t affect the score and isn’t utilized very often, I don’t think it’s really useful.

Providing comprehensive and in-depth feedback is the primary function of the BJCP scoresheets, so having additional space might be quite beneficial.

Electronic scoresheets

I am all for digitalization. These days, I hardly print anything out. Thus, in theory, having an electronic scoresheet is an appealing idea. But we should consider the big picture here. Let me expand on that.

This is a topic that regularly pops up in the BJCP Facebook group. Many issues would be resolved by electronic scoresheets, including hard-to-read handwriting, sore hands from writing the scoresheet, human mistakes in score calculation, and far faster scoresheet processing.

There are many excellent software packages available that are leading contenders for automating the beer judges’ tasks. However, high-quality software alone cannot solve this issue.

A few years ago, I had the pleasure of serving as a judge for a professional beer competition in Spain, which made use of the commercial BAP (Beer Awards Platform) software. All in all, it was a fantastic experience.

I forgot to bring a Schuko adapter with me for my laptop power supply. I found that out at the last moment, when the first judging session was about to start. Nobody could provide a suitable adapter, so I started filling in the electronic scoresheets on my laptop running on battery alone.

While I was judging, the battery almost ran out. Fortunately, I was able to borrow a compatible power supply from another judge.

Although it might seem like a trivial issue, dealing with technology can be challenging. During one of the most recent conversations on this subject on the BJCP international Facebook page, UK judge Sarah Pantry brilliantly pointed it out (link).

The incident with my adapter seems insignificant in comparison to the numerous technological problems that could arise during a competition: defective Wi-Fi, troubles with local area networks (LANs), general connectivity problems, and laptops that might completely stop operating. For larger professional competitions that have the resources to pay for tech support, this shouldn’t be a major issue, but for smaller local events that have a much smaller budget (if any), it is a major issue.

Not to mention the BJCP Tasting Exams, which are often held in the back room of a pub without even a reliable Wi-Fi connection.

Having said that, I don’t believe digital BJCP scoresheets will be widely available anytime soon. As a competition organizer and exam administrator, I still prefer to spend some time scanning the scoresheet rather than worrying about all the technical issues that might come up during a competition or, worse, during the demanding schedule of a Tasting Exam, where beers must be served precisely every fifteen minutes.